
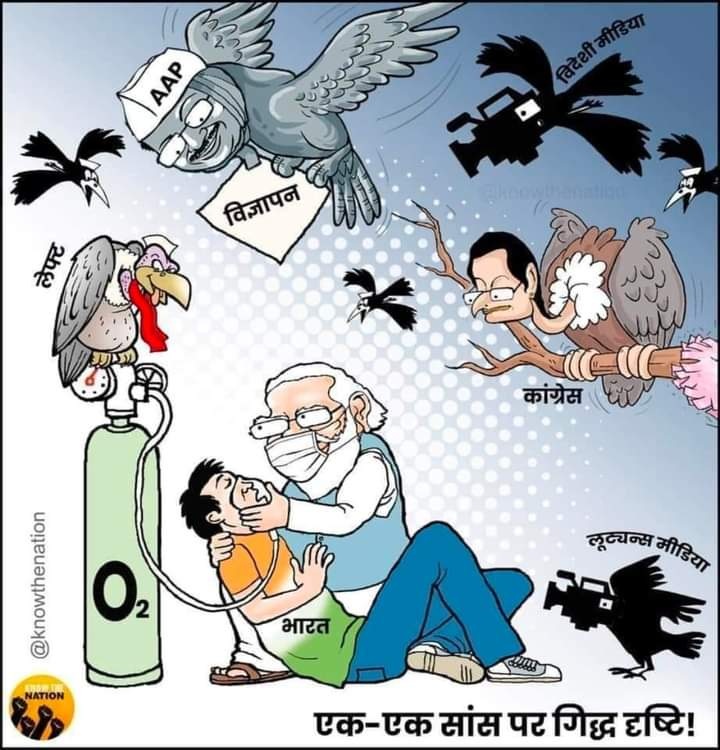

SC SCRAPS BAN ON COVID POST!

In the News, May 01 - May 07, 2021 | April 29, 2021

REVERSED: Facebook has restored several posts demanding the resignation of Narendra Modi for mismanagement of the latest covid wave after removing them on the orders of the Central government.

By Wire Staff

The Supreme Court has dismissed the government directive to Facebook not to carry posts on the mismanagement of the latest covid wave calling for resignation of PM Narendra Modi…..

While Facebook had removed a post on a group, described as a forum for “Indian Muslims”, for violating its policy on ‘violence and incitement’, the Oversight Board has now overturned the decision.

Facebook’s Oversight Board has overturned a decision made by the social media giant to remove content in a group for “Indian Muslims” that was posted in late October 2020, around the time that French school teacher Samuel Paty was beheaded by an Islamist terrorist.

Referred to by experts as a quasi-Supreme Court, the board was created last year to carve out a new way for Facebook users to appeal content decisions on the social media platform, given previous criticism over how the company handles hate speech, violent extremism and graphic materials.

Decisions made by the 20-person board – which includes several constitutional law experts and rights advocates, a Nobel Peace Prize Laureate and a former prime minister of Denmark – are final and cannot be overruled by Facebook CEO Mark Zuckerberg. The board’s members were appointed by a co-chair system – Facebook recruited four people (two American law professors, the former Denmark PM and a Colombian law school dean), who in turn appointed the rest.

The only Indian on the board is National Law School of India Vice-Chancellor Sudhir Krishnaswamy.

The watchdog’s first batch of cases included one from India, the decision for which was announced Friday evening.

The post in question contained a meme from a Turkish show (a character holding a sheathed sword) and a text overlay in Hindi that stated: “if the tongue of the kafir starts against the Prophet, then the sword should be taken out of the sheath.” The accompanying hashtags referred to French president Emmanuel Macron as the “devil” and called for a boycott of French products.

In early November 2020, Facebook removed the post for violating its policy on ‘Violence and Incitement’ – the company interpreted “kafir” as a pejorative term referring to nonbelievers in this context and concluded that the post was a veiled threat of violence against kafirs and removed it.

In its ruling, the board said that a majority of its members did not consider the use of the meme, while referring to violence, to be a call to “physical harm”.

“A majority of the Board considered that the use of the hashtag to call for a boycott of French products was a call to non-violent protest and part of discourse on current political events. The use of a meme from a popular television show within this context, while referring to violence, was not considered by a majority as a call to physical harm,” the case decision noted.

“In relation to Facebook’s explanation, the Board noted that Facebook justified its decision by referring to ongoing tensions in India…The Board unanimously found that analysis of context is essential to understand veiled threats, yet a majority did not find Facebook’s contextual rationale in relation to possible violence in India in this particular case compelling.”

What was the post and how did it get removed?

The case description notes that the post was published in late October 2020, in a public group that describes itself as a forum for “providing information for Indian Muslims”.

While neither Facebook nor the Oversight Board has provided the public with an image of the exact post, its contents are described in the case summary, as outlined above.

After the post was published, it was reported by two different users – one for hate speech and the other for violence and incitement.

While Facebook did not initially remove the post, the board noted that the social media giant received information from a third-party partner that this content had the “potential to contribute to violence”.

The case outcome notes that this third-party partner was part of a trusted partner network that is “not linked to any state”, but was used as a way for the company to obtain additional local context about India.

“After the post was flagged by the third-party partner, Facebook sought additional contextual information from its local public policy team, which agreed with the third-party partner that the post was potentially threatening. Facebook referred the case to the Oversight Board on November 19, 2020. In its referral, Facebook stated that it considered its decision to be challenging because the content highlighted tensions between what it considered religious speech and a possible threat of violence, even if not made explicit,” the board noted.

Why was it taken down?

Facebook’s ‘Violence and Incitement Community Standard’ does not allow content that creates a “genuine risk of physical harm or direct threats to public safety”. This includes coded statements “where the method of violence or harm is not clearly articulated, but the threat is veiled or implicit.”

Based on these criteria, Facebook determined “the sword should be taken out of the sheath” was a veiled threat against “kafirs” generally. In this case, Facebook interpreted the term “kafir” as pejorative with a retaliatory tone against non-Muslims; the reference to the sword as a threatening call to action; and also found it to be an “implied reference to historical violence.”

The social media firm also told the board that it was crucial to consider the context in which the content was posted.

“According to Facebook, the content was posted at a time of religious tensions in India related to the Charlie Hebdo trials in France and elections in the Indian state of Bihar. Facebook noted rising violence against Muslims, such as the attack in Christchurch, New Zealand, against a mosque. It also noted the possibility of retaliatory violence by Muslims as leading to increased sensitivity in addressing potential threats both against and by Muslims,” the case notes said.

Why was it overturned?

According to the case decision, a majority of the oversight board noted that while the post referenced a sword, they interpreted it as criticising Macron’s response to religiously motivated violence, rather than as a credible threat of violence.

“The Board considered a number of factors in determining that harm was improbable. The broad nature of the target (“kafirs”) and the lack of clarity around potential physical harm or violence, which did not appear to be imminent, contributed to the majority’s conclusion. The user not appearing to be a state actor or a public figure or otherwise having particular influence over the conduct of others, was also significant,” the verdict noted.

“In addition there was no veiled reference to a particular time or location of any threatened or incited action.”

Interestingly, the oversight board also rejected Facebook’s claims that the Indian context was a factor in determining whether the content should be taken down.

A minority of board members disagreed with the overall logic, noting that the Charlie Hebdo killings and recent beheadings in France related to blasphemy meant that this threat could not be “dismissed as unrealistic”.

“The hashtags referencing events in France support this interpretation. In this case, the minority expressed that Facebook should not wait for violence to be imminent before removing content that threatens or intimidates those exercising their right to freedom of expression, and would have upheld Facebook’s decision,” the outcome decision noted.

Ultimately, however, a majority of board members found that Facebook “did not accurately assess all contextual information” and decreed that “international human rights standards on expression justify the Board’s decision to restore the content”.

Courtesy: www.thewire.in